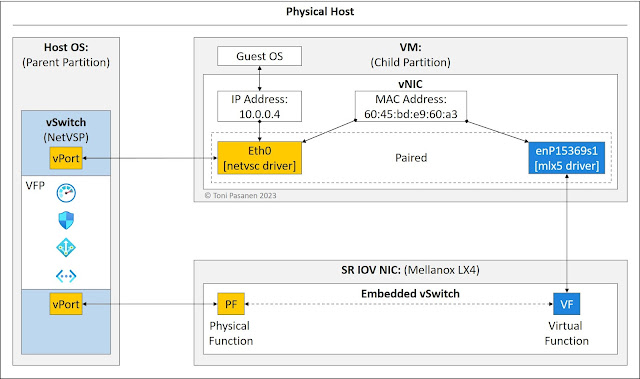

Before moving to the Virtual Filtering Platform (VFP) and Accelerated Networking (AccelNet) section, let’s look at the guest OS vNIC interface architecture. When we create a VM, Azure automatically attaches a virtual NIC (vNIC) to it. Each vNIC has a synthetic interface, a VMBus device that uses the netvsc driver. If AccelNet is disabled on a VM, all traffic flows pass over the synthetic interface to the software switch. Azure host servers have Mellanox/NVIDIA Single Root I/O Virtualization (SR-IOV) hardware NICs, which offer virtual instances, called Virtual Functions (VFs), to virtual machines. When we enable AccelNet on a VM, the mlx driver is installed on the vNIC. The mlx driver version depends on the SR-IOV NIC type. The mlx driver initializes a new interface that connects the vNIC to an embedded switch on the SR-IOV hardware NIC. This VF interface is then associated with the netvsc interface. Both interfaces use the same MAC address, but the IP address is associated only with the synthetic interface. When AccelNet is enabled, the VM’s vNIC forwards data flows over the VF interface via the synthetic interface. This architecture allows In-Service Software Updates (ISSU) for SR-IOV NIC drivers, because traffic can fall back to the synthetic path while the VF is being serviced.

Note! Exception traffic, that is, a data flow with no matching flow entry in the UFT/GFT, is forwarded through VFP in order to create flow-action entries in the UFT/GFT.

Figure 1-1: Azure Host-Based SDN Building Blocks.

The output of the Linux dmesg command in the following four examples shows the interface initialization process when a Linux VM comes up. The Hyper-V VMBus driver (hv_vmbus) provides a logical communication channel between the child partition running a guest OS and the parent partition running the host OS. First, the hv_vmbus driver detects and registers the hv_netvsc driver for the synthetic interface eth0. The NetVSC (Network Virtual Service Client) driver redirects requests from the VM to the NetVSP (Network Virtual Service Provider) driver running on the parent partition. Note that the Globally Unique Identifier (GUID) assigned to eth0 is derived from the vNIC MAC address 60:45:bd:e9:60:a3.

root@vm-one-sdn-demo:/home/azureuser# dmesg

hv_vmbus: Vmbus version:5.3

hv_vmbus: registering driver hv_netvsc

hv_netvsc 6045bde9-60a3-6045-bde9-60a36045bde9 eth0: VF slot 1 added

Example 1-1: dmesg output – netvsc registration.
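The relationship between the MAC address and the GUID in Example 1-1 can be reproduced with a few lines of Python. This is only a sketch based on the single GUID/MAC pair shown above: the pattern (the 12 hex digits of the MAC repeated and cut to 32 digits) is inferred from that one example, and the function name `netvsc_guid_from_mac` is hypothetical.

```python
def netvsc_guid_from_mac(mac: str) -> str:
    """Rebuild the GUID-style device name seen in Example 1-1 from a MAC.

    Assumption: the pattern is inferred from one dmesg sample, not taken
    from any driver documentation.
    """
    digits = mac.replace(":", "").replace("-", "").lower()
    if len(digits) != 12 or any(c not in "0123456789abcdef" for c in digits):
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    # Repeat the 12 hex digits and keep the first 32 (the length of a GUID).
    raw = (digits * 3)[:32]
    # Standard 8-4-4-4-12 GUID grouping.
    return "-".join([raw[0:8], raw[8:12], raw[12:16], raw[16:20], raw[20:32]])


print(netvsc_guid_from_mac("60:45:bd:e9:60:a3"))
# prints 6045bde9-60a3-6045-bde9-60a36045bde9
```

The printed value matches the device name that hv_netvsc reports for eth0 in Example 1-1.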

Next, the hv_vmbus driver registers the hv_pci driver, a prerequisite for the VF interface detection and initialization process. Azure assigns a GUID to hv_pci, in which bytes 5-6 are used as the PCI domain ID (3c09 in our example). After registration, the hv_pci driver probes and initializes a PCI bus in domain 3c09 with the reserved bus numbers 00-ff. The PCI device in slot 02, function 0 (address 3c09:00:02.0) is assigned to bus 00. The hex pair 15b3:1016 within square brackets identifies the vendor and the device type. In our example, 15b3 = Mellanox/NVIDIA and 1016 = MT27710 Family [ConnectX-4 Lx Virtual Function], a 10/25/40/50 Gigabit Ethernet adapter.

hv_vmbus: registering driver hv_pci

hv_pci bad89801-3c09-4072-9182-76209ebdac21: PCI VMBus probing: Using version 0x10004

hv_pci bad89801-3c09-4072-9182-76209ebdac21: PCI host bridge to bus 3c09:00

pci_bus 3c09:00: root bus resource [mem 0xfe0000000-0xfe00fffff window]

pci_bus 3c09:00: No busn resource found for root bus, will use [bus 00-ff]

3c09:00:02.0: [15b3:1016] type 00 class 0x020000

pci 3c09:00:02.0: reg 0x10: [mem 0xfe0000000-0xfe00fffff 64bit pref]

pci 3c09:00:02.0: enabling Extended Tags

pci 3c09:00:02.0: 0.000 Gb/s available PCIe bandwidth, limited by Unknown x0 link at 3c09:00:02.0 (capable of 63.008 Gb/s with 8.0 GT/s PCIe x8 link)

Example 1-2: dmesg – PCI bus initialization process.
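To make the two identifiers in Example 1-2 easier to spot, the following Python sketch pulls the PCI domain out of the hv_pci GUID and the vendor:device pair out of a dmesg line. The function names and the one-entry lookup table `KNOWN_IDS` are hypothetical; the table holds only the ID pair named in this article.

```python
import re

# Assumption: a tiny lookup table with only the ID pair named in this article.
KNOWN_IDS = {
    ("15b3", "1016"): "Mellanox/NVIDIA MT27710 Family [ConnectX-4 Lx Virtual Function]",
}


def pci_domain_from_guid(guid: str) -> str:
    """Return bytes 5-6 of the GUID (its second group), used as the PCI domain."""
    return guid.split("-")[1]


def vendor_device(line: str):
    """Extract the [vendor:device] pair from a dmesg PCI line, or None."""
    match = re.search(r"\[([0-9a-f]{4}):([0-9a-f]{4})\]", line)
    return match.groups() if match else None


guid = "bad89801-3c09-4072-9182-76209ebdac21"
line = "3c09:00:02.0: [15b3:1016] type 00 class 0x020000"

print(pci_domain_from_guid(guid))          # prints 3c09
ids = vendor_device(line)
print(ids, KNOWN_IDS.get(ids, "unknown"))
```

On a real system, a fuller ID-to-name mapping would typically come from the pci.ids database rather than a hand-written dictionary.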

The mlx5_core driver initializes a VF during the VM bootup process.

mlx5_core 3c09:00:02.0: enabling device (0000 -> 0002)

mlx5_core 3c09:00:02.0: firmware version: 14.30.1224

mlx5_core 3c09:00:02.0: MLX5E: StrdRq(0) RqSz(1024) StrdSz(256) RxCqeCmprss(0)

Example 1-3: dmesg – VF initialization.

The hv_netvsc driver, used by the synthetic interface eth0, detects the new VF interface and bonds it with eth0. After the eth1-to-eth0 bonding, the VF interface is renamed from eth1 to enP15369s1 and brought up. When the VF interface to the hardware NIC is up, eth0 starts forwarding data via the VF interface (fast path) instead of the vPort on the software switch (slow path).

hv_netvsc 6045bde9-60a3-6045-bde9-60a36045bde9 eth0: VF registering: eth1

mlx5_core 3c09:00:02.0 eth1: joined to eth0

mlx5_core 3c09:00:02.0 enP15369s1: renamed from eth1

mlx5_core 3c09:00:02.0 enP15369s1: Link up

hv_netvsc 6045bde9-60a3-6045-bde9-60a36045bde9 eth0: Data path switched to VF: enP15369s1

Example 1-4: dmesg – VF Interface to Synthetic Interface bonding.
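The number in the new interface name is not arbitrary: 15369 in decimal equals 3c09 in hex, the PCI domain from Example 1-2. The Python sketch below decodes such a name. It assumes the name follows the P<domain>, optional p<bus>, s<slot> layout of predictable interface names with decimal fields; the function name `decode_vf_name` is hypothetical.

```python
import re


def decode_vf_name(name: str) -> dict:
    """Split a name such as enP15369s1 into its PCI domain, bus, and slot.

    Assumption: fields are decimal and laid out as enP<domain>[p<bus>]s<slot>.
    """
    match = re.fullmatch(r"enP(\d+)(?:p(\d+))?s(\d+)", name)
    if match is None:
        raise ValueError(f"unexpected interface name: {name!r}")
    domain, bus, slot = match.groups()
    return {
        "domain_hex": format(int(domain), "04x"),  # 15369 -> 3c09
        "bus": None if bus is None else int(bus),
        "slot": int(slot),
    }


print(decode_vf_name("enP15369s1"))
print(decode_vf_name("enP15369p0s2"))
```

Both names decode to domain 3c09, which ties the interface back to the hv_pci GUID assigned by Azure.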

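Example 1-5 shows how we can verify the PCI device ID, the vendor:device pair, and the bound driver. In the first field, 00 is the bus number and 10 is the device/function byte (hex 0x10 encodes slot 02, function 0).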

azureuser@vm-one-sdn-demo:~$ cat /proc/bus/pci/devices | cut -f1-2,18

0010 15b31016 mlx5_core

Example 1-5: PCI Device Id, Vendor/Device, and Driver Verification.
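The packed fields in Example 1-5 can be unpacked as follows. This Python sketch assumes the usual layout of /proc/bus/pci/devices, where the first field is the bus number followed by a device/function byte (slot in the upper five bits, function in the lower three) and the second field is the vendor ID followed by the device ID. The function name `decode_pci_entry` is hypothetical.

```python
def decode_pci_entry(bus_devfn: str, vendor_device: str) -> dict:
    """Decode the first two fields of a /proc/bus/pci/devices line."""
    value = int(bus_devfn, 16)
    bus, devfn = value >> 8, value & 0xFF
    return {
        "bus": bus,
        "slot": devfn >> 3,        # upper five bits of the devfn byte
        "function": devfn & 0x7,   # lower three bits of the devfn byte
        "vendor": vendor_device[:4],
        "device": vendor_device[4:],
    }


print(decode_pci_entry("0010", "15b31016"))
# prints {'bus': 0, 'slot': 2, 'function': 0, 'vendor': '15b3', 'device': '1016'}
```

The decoded slot 2, function 0 on bus 0 corresponds to the address 3c09:00:02.0 seen in the dmesg output.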

Example 1-6 shows that the synthetic interface eth0 and the VF interface enP15369s1 use the same MAC address, while the IP address 10.0.0.4 is associated only with eth0.

azureuser@vm-one-sdn-demo:~$ ifconfig

enP15369s1: flags=6211<UP,BROADCAST,RUNNING,SLAVE,MULTICAST> mtu 1500

ether 60:45:bd:e9:60:a3 txqueuelen 1000 (Ethernet)

RX packets 6167 bytes 7401002 (7.4 MB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 78259 bytes 19889370 (19.8 MB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.0.0.4 netmask 255.255.255.0 broadcast 10.0.0.255

inet6 fe80::6245:bdff:fee9:60a3 prefixlen 64 scopeid 0x20<link>

ether 60:45:bd:e9:60:a3 txqueuelen 1000 (Ethernet)

RX packets 60618 bytes 22872367 (22.8 MB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 74548 bytes 19642740 (19.6 MB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 146 bytes 17702 (17.7 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 146 bytes 17702 (17.7 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Example 1-6: vNIC Interface Configuration.

Example 1-7 shows that the VF interface enP15369s1 (slave) is bonded with eth0 (master).

azureuser@vm-one-sdn-demo:~$ ip link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000

link/ether 60:45:bd:e9:60:a3 brd ff:ff:ff:ff:ff:ff

3: enP15369s1: <BROADCAST,MULTICAST,SLAVE,UP,LOWER_UP> mtu 1500 qdisc mq master eth0 state UP mode DEFAULT group default qlen 1000

link/ether 60:45:bd:e9:60:a3 brd ff:ff:ff:ff:ff:ff

altname enP15369p0s2

Example 1-7: Interface Bonding Verification.
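The check done by eye in Example 1-7 can also be scripted. The Python sketch below parses `ip link` text and reports every interface whose master has the same MAC address, which is the signature of a VF bonded to its synthetic interface. The sample text is an excerpt of Example 1-7; the function names `parse_ip_link` and `vf_pairs` are hypothetical.

```python
import re

# Excerpt of the "ip link" output from Example 1-7.
SAMPLE = """\
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000
    link/ether 60:45:bd:e9:60:a3 brd ff:ff:ff:ff:ff:ff
3: enP15369s1: <BROADCAST,MULTICAST,SLAVE,UP,LOWER_UP> mtu 1500 qdisc mq master eth0 state UP mode DEFAULT group default qlen 1000
    link/ether 60:45:bd:e9:60:a3 brd ff:ff:ff:ff:ff:ff
"""


def parse_ip_link(text: str) -> dict:
    """Collect flags, master, and MAC address per interface."""
    links, current = {}, None
    for line in text.splitlines():
        head = re.match(r"\d+: ([^:@]+)[^:]*: <([^>]*)>(.*)", line)
        if head:
            name, flags, rest = head.groups()
            master = re.search(r"\bmaster (\S+)", rest)
            current = links[name] = {
                "flags": flags.split(","),
                "master": master.group(1) if master else None,
                "mac": None,
            }
        elif current is not None:
            mac = re.search(r"link/ether ([0-9a-f:]{17})", line)
            if mac:
                current["mac"] = mac.group(1)
    return links


def vf_pairs(links: dict) -> list:
    """Return (slave, master) pairs that share one MAC address."""
    return [
        (name, info["master"])
        for name, info in links.items()
        if info["master"]
        and links.get(info["master"], {}).get("mac") == info["mac"]
    ]


print(vf_pairs(parse_ip_link(SAMPLE)))
# prints [('enP15369s1', 'eth0')]
```

On a live VM, the same function could be fed the captured output of `ip link` instead of the embedded sample.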

The last example, 1-8, verifies that the eth0 interface sends and receives traffic through the VF interface.

azureuser@vm-one-sdn-demo:~$ ethtool -S eth0 | grep ' vf_'

vf_rx_packets: 564

vf_rx_bytes: 130224

vf_tx_packets: 61497

vf_tx_bytes: 16186835

vf_tx_dropped: 0

Example 1-8: VF Traffic Statistics Verification.
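For monitoring purposes, the vf_ counters can be turned into numbers and checked automatically. The Python sketch below parses the counter lines shown above and flags whether the VF data path has carried any traffic. The function name `parse_counters` is hypothetical, and treating "counters greater than zero" as "fast path in use" is a simplifying assumption.

```python
# Counter lines copied from the ethtool output above.
SAMPLE = """\
     vf_rx_packets: 564
     vf_rx_bytes: 130224
     vf_tx_packets: 61497
     vf_tx_bytes: 16186835
     vf_tx_dropped: 0
"""


def parse_counters(text: str) -> dict:
    """Turn 'name: value' lines from ethtool -S into a dict of integers."""
    counters = {}
    for line in text.splitlines():
        name, sep, value = line.strip().partition(":")
        if sep and value.strip().isdigit():
            counters[name] = int(value)
    return counters


counters = parse_counters(SAMPLE)
# Assumption: any non-zero VF packet count means the fast path was used.
vf_in_use = counters["vf_rx_packets"] > 0 or counters["vf_tx_packets"] > 0
print(counters)
print("VF data path in use:", vf_in_use)
```

Sampling these counters twice and comparing the values would show whether traffic is currently flowing over the VF, rather than only whether it has at some point since boot.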
