Introduction

To achieve active/active redundancy for a Network Virtual Appliance (NVA) in a Hub-and-Spoke VNet design, we can utilize an Internal Load Balancer (ILB) to enable Spoke-to-Spoke traffic.

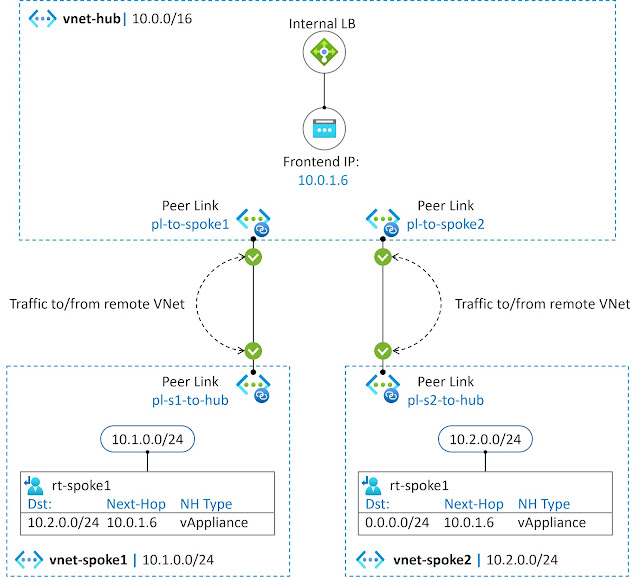

Figure 5-1 illustrates our example topology, which consists of a vnet-hub and spoke VNets. The ILB is associated with the subnet 10.0.1.0/24, where we allocate a Frontend IP address (FIP) using either the dynamic or the static allocation method. Unlike with a public load balancer's inbound rules, with an ILB we can choose the High-Availability (HA) ports option to load balance all TCP and UDP flows. The backend pool and health probe configurations remain the same as those used with a Public Load Balancer (PLB).

From the NVA perspective, the configuration is straightforward. We enable IP forwarding both in the Linux kernel and on the virtual NIC, but we do not configure pre-routing (destination NAT). We can use post-routing policies (source NAT) if we want to hide real IP addresses or if symmetric traffic paths are required. To route egress traffic from the spoke VNets to the NVAs via the ILB, we create subnet-specific route tables in the spoke VNets. The route table "rt-spoke1" has the specific entry "10.2.0.0/24 > 10.0.1.6 (ILB)" rather than a default route because vm-prod-1 has a public IP address used for external access. If we set a default route toward the ILB there, as we have done for the subnet 10.2.0.0/24 in "vnet-spoke2", the external connection would fail.
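The kernel side of the IP forwarding setting can be checked on the NVA itself. The following is a minimal sketch, assuming a Linux NVA that exposes the standard procfs flag; the function name ip_forwarding_enabled is our own and not part of any tool. It only covers the kernel flag, the IP forwarding option of the Azure virtual NIC has to be verified separately.

```python
from pathlib import Path

# Standard Linux procfs location of the IPv4 forwarding flag.
IP_FORWARD_PATH = Path("/proc/sys/net/ipv4/ip_forward")


def ip_forwarding_enabled(path: Path = IP_FORWARD_PATH) -> bool:
    """Return True if the flag file contains '1' (forwarding enabled)."""
    return path.read_text().strip() == "1"
```

Calling ip_forwarding_enabled() without arguments on the NVA reads the live kernel setting. The path parameter exists only so that the check can be exercised against a test file.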

Internal Load Balancer’s Settings

Frontend IP Address

The figure below shows the settings of the ILB. We have selected the subnet 10.0.1.0/24 and the dynamic IP address allocation method. This IP address is used as the frontend IP address in the inbound rule (Figure 5-5).

Figure 5-3 shows the Backend pool, to which we have attached our NVAs.

Figure 5-4 shows the Health Probe settings. We use TCP/22 because the Linux NVAs listen for SSH connections on port 22 by default.
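A TCP health probe boils down to testing whether a TCP connection to the probe port can be established. The sketch below illustrates that idea only; it is not Azure's implementation, and the function name tcp_probe and the two-second timeout are assumptions made for this example.

```python
import socket


def tcp_probe(host: str, port: int = 22, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        # create_connection performs the full TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, unreachable, or timed out: treat the target as down.
        return False
```

Running tcp_probe against the private IP address of an NVA from a host in the hub VNet gives a rough indication of whether the NVA would answer a TCP/22 probe.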

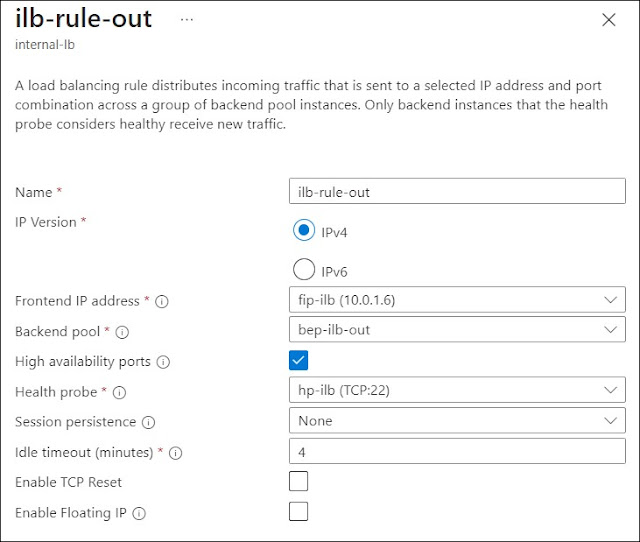

Figure 5-5 illustrates our example inbound rule, where the frontend IP address is associated with the backend pool and utilizes the health probe depicted in Figure 5-4. In addition, the “High availability ports” option has been selected to load balance all TCP and UDP flows. In summary, when a virtual machine (VM) sends traffic via the ILB using the frontend IP address 10.0.1.6 as the next-hop (defined in the subnet-specific route table), the ILB forwards the traffic to one of the healthy NVAs associated with the backend pool.
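The forwarding decision summarized above can be pictured as a hash over the flow's five-tuple, taken across the currently healthy backends. Azure Load Balancer distributes flows with a five-tuple hash by default, but its exact algorithm is not public, so the SHA-256 and modulo steps below are purely illustrative assumptions. The names Flow and pick_backend, as well as the port numbers, are hypothetical.

```python
import hashlib
from typing import NamedTuple, Sequence


class Flow(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str


def pick_backend(flow: Flow, healthy_backends: Sequence[str]) -> str:
    """Map a flow to one healthy backend, deterministically per five-tuple."""
    if not healthy_backends:
        raise ValueError("no healthy backend available")
    key = "|".join(map(str, flow)).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(healthy_backends)
    return healthy_backends[index]


# Illustrative flow from vm-prod-1 to vm-prod-2; the ports are made up.
flow = Flow("10.1.0.4", 40000, "10.2.0.4", 443, "tcp")
print(pick_backend(flow, ["nva1", "nva2"]))  # same NVA every time for this flow
print(pick_backend(flow, ["nva2"]))          # nva1 unhealthy, so nva2 is chosen
```

The point of the sketch is that all packets of one flow land on the same NVA as long as the set of healthy backends does not change, and that an NVA failing its health probe simply drops out of the candidate list.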

Figure 5-6 depicts the VNet peering Hub-and-Spoke topology. Our peer link policy permits traffic to be forwarded both from remote VNets and to remote VNets. Note that in this chapter, we are not utilizing a Virtual Network Gateway (VGW) or Route Servers (RS).

The example below shows the peering status from the vnet-hub perspective. The status of both peerings is Connected, and Gateway transit is disabled.

The example below shows the peering status from the vnet-spoke1 perspective. The status of both peerings is Connected, and Gateway transit is disabled.

User Defined Routing

As the final step, we create subnet-specific route tables for the spoke VNets. The route table "rt-spoke1", associated with the subnet 10.1.0.0/24, has a routing entry for subnet 10.2.0.0/24 with the next-hop 10.0.1.6 (ILB). In addition, we attach the route table "rt-spoke2" to the subnet 10.2.0.0/24. This route table contains a default route that directs traffic to the ILB.
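The effect of the two route tables can be reasoned about with a small route-selection sketch. Azure selects the route with the longest matching prefix and, when prefixes are equal, prefers a user-defined route over a system default route. The route lists below are simplified assumptions (only the hub peering route and the Internet default route are modeled as system routes), the names Route and select_route are hypothetical, and 203.0.113.10 is a documentation address standing in for an Internet destination.

```python
import ipaddress
from typing import List, NamedTuple


class Route(NamedTuple):
    prefix: str
    next_hop: str
    source: str  # "user" for a UDR, "default" for a system route


def select_route(routes: List[Route], destination: str) -> Route:
    """Longest prefix match; on equal prefixes a UDR beats a system route."""
    dst = ipaddress.ip_address(destination)
    candidates = [r for r in routes if dst in ipaddress.ip_network(r.prefix)]
    if not candidates:
        raise LookupError(f"no route to {destination}")
    return max(
        candidates,
        key=lambda r: (ipaddress.ip_network(r.prefix).prefixlen, r.source == "user"),
    )


# Simplified system routes shared by both spoke subnets.
system_routes = [
    Route("10.0.0.0/16", "VNet peering", "default"),
    Route("0.0.0.0/0", "Internet", "default"),
]
rt_spoke1 = system_routes + [Route("10.2.0.0/24", "10.0.1.6", "user")]
rt_spoke2 = system_routes + [Route("0.0.0.0/0", "10.0.1.6", "user")]

print(select_route(rt_spoke1, "10.2.0.4").next_hop)      # 10.0.1.6
print(select_route(rt_spoke1, "203.0.113.10").next_hop)  # Internet
print(select_route(rt_spoke2, "10.1.0.4").next_hop)      # 10.0.1.6
print(select_route(rt_spoke2, "203.0.113.10").next_hop)  # 10.0.1.6
```

With rt-spoke1, only traffic to 10.2.0.0/24 is sent to the ILB, so vm-prod-1 keeps its direct Internet path. With rt-spoke2, the user-defined default route overrides the system default route, so all traffic leaving the subnet for destinations outside the known prefixes goes through the ILB.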

Figures 5-10 and 5-11 show the User Defined Routes (UDR) in route tables rt-spoke2 and rt-spoke1 with their associated subnets.

In Figure 5-12, we can observe the effective routes of vNIC vm-prod2621. It includes a UDR with the route 0.0.0.0/0, where the next-hop type/IP is Virtual Appliance/10.0.1.6 (ILB's frontend IP). The next-hop IP address belongs to vnet-hub IP space 10.0.0.0/16, which is reachable through the VNet peering connection. Figure 5-13 illustrates the effective routing from the perspective of vm-prod-11.

Figures 5-14 and 5-15 display the effective routes from the NVA’s perspective. Both NVAs have learned the subnets from the spokes via VNet peering connections. Note that the default route 0.0.0.0/0 with the Internet as the next-hop type is active on all vNICs, except for the one attached to vm-prod-2 (Figure 5-12). This distinction arises because the NVAs and vm-prod-1 have public IP addresses, which they use for Internet connections, whereas vm-prod-2 does not.

Data Plane Test

Example 5-1 verifies that we have IP connectivity between vnet-spoke1 and vnet-spoke2.

    azureuser@vm-prod-1:~$ ping 10.2.0.4 -c4
    PING 10.2.0.4 (10.2.0.4) 56(84) bytes of data.
    64 bytes from 10.2.0.4: icmp_seq=1 ttl=63 time=3.64 ms
    64 bytes from 10.2.0.4: icmp_seq=2 ttl=63 time=2.58 ms
    64 bytes from 10.2.0.4: icmp_seq=3 ttl=63 time=1.73 ms
    64 bytes from 10.2.0.4: icmp_seq=4 ttl=63 time=7.28 ms

    --- 10.2.0.4 ping statistics ---
    4 packets transmitted, 4 received, 0% packet loss, time 3006ms
    rtt min/avg/max/mdev = 1.726/3.803/7.275/2.115 ms

Example 5-1: Ping from vm-prod-1 to vm-prod-2.

Furthermore, Example 5-2 demonstrates that the Internal Load Balancer (ILB) forwards traffic to NVA1. Note that each ICMP request and reply message is shown twice in the tcpdump output. For example, the first ICMP echo request: IP (ICMP) 10.1.0.4 > 10.2.0.4, arrives at NVA1 from 10.1.0.4 via interface eth0. Subsequently, NVA1 sends the packet to 10.2.0.4 using the same interface.

    azureuser@nva1:~$ sudo tcpdump -i eth0 host 10.2.0.4 or 10.1.0.4 -n -t
    tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
    listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes
    IP 10.1.0.4 > 10.2.0.4: ICMP echo request, id 18, seq 1, length 64
    IP 10.1.0.4 > 10.2.0.4: ICMP echo request, id 18, seq 1, length 64
    IP 10.2.0.4 > 10.1.0.4: ICMP echo reply, id 18, seq 1, length 64
    IP 10.2.0.4 > 10.1.0.4: ICMP echo reply, id 18, seq 1, length 64
    IP 10.1.0.4 > 10.2.0.4: ICMP echo request, id 18, seq 2, length 64
    IP 10.1.0.4 > 10.2.0.4: ICMP echo request, id 18, seq 2, length 64
    IP 10.2.0.4 > 10.1.0.4: ICMP echo reply, id 18, seq 2, length 64
    IP 10.2.0.4 > 10.1.0.4: ICMP echo reply, id 18, seq 2, length 64
    IP 10.1.0.4 > 10.2.0.4: ICMP echo request, id 18, seq 3, length 64
    IP 10.1.0.4 > 10.2.0.4: ICMP echo request, id 18, seq 3, length 64
    IP 10.2.0.4 > 10.1.0.4: ICMP echo reply, id 18, seq 3, length 64
    IP 10.2.0.4 > 10.1.0.4: ICMP echo reply, id 18, seq 3, length 64
    IP 10.1.0.4 > 10.2.0.4: ICMP echo request, id 18, seq 4, length 64
    IP 10.1.0.4 > 10.2.0.4: ICMP echo request, id 18, seq 4, length 64
    IP 10.2.0.4 > 10.1.0.4: ICMP echo reply, id 18, seq 4, length 64
    IP 10.2.0.4 > 10.1.0.4: ICMP echo reply, id 18, seq 4, length 64
    ^C
    16 packets captured
    16 packets received by filter
    0 packets dropped by kernel

Example 5-2: Tcpdump from NVA1.

Example 5-3 shows that NVA2 is not receiving traffic from vm-prod-1 or vm-prod-2.

    azureuser@nva2:~$ sudo tcpdump -i eth0 host 10.2.0.4 or 10.1.0.4 -n -t
    tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
    listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes
    ^C
    0 packets captured
    0 packets received by filter
    0 packets dropped by kernel
    azureuser@nva2:~$

Example 5-3: Tcpdump from NVA2.

Verification

Figure 5-16 shows the graphical view of the internal load balancer’s objects with their names. The inbound rule ILB-RULE-OUT binds the frontend IP fip-ilb to the backend pool bep-ilb-out, to which we have added the NVAs.

Figure 5-17 confirms that the data path is available and NVAs in the backend pool are alive. Figures 5-17 and 5-18 prove that the ILB is in the data path.

Failover Test

The failover test is done by shutting down NVA1, which the ILB uses for the data path between vm-prod-1 (vnet-spoke1) and vm-prod-2 (vnet-spoke2). The ping statistics show that the failover causes five lost packets and nine errors (generated by ICMP redirect messages, which are not shown in the output).

    azureuser@vm-prod-1:~$ ping 10.2.0.4
    64 bytes from 10.2.0.4: icmp_seq=55 ttl=63 time=3.08 ms
    64 bytes from 10.2.0.4: icmp_seq=56 ttl=63 time=3.73 ms
    64 bytes from 10.2.0.4: icmp_seq=57 ttl=63 time=3.01 ms
    64 bytes from 10.2.0.4: icmp_seq=63 ttl=63 time=3.84 ms
    <snipped>
    --- 10.2.0.4 ping statistics ---
    66 packets transmitted, 61 received, +9 errors, 7.57576% packet loss, time 65220ms
    rtt min/avg/max/mdev = 1.507/2.962/8.922/1.152 ms
    azureuser@vm-prod-1:~$

Example 5-4: Ping from vm-prod-1 to vm-prod-2.
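The loss figures in Example 5-4 can be cross-checked with a few lines of Python. This is a small sketch that works only on the excerpt shown in the example; the helper names are our own. It finds the gap in the icmp_seq numbers and recomputes the loss percentage from the transmitted and received counters.

```python
import re

# The four reply lines visible in Example 5-4.
PING_EXCERPT = """\
64 bytes from 10.2.0.4: icmp_seq=55 ttl=63 time=3.08 ms
64 bytes from 10.2.0.4: icmp_seq=56 ttl=63 time=3.73 ms
64 bytes from 10.2.0.4: icmp_seq=57 ttl=63 time=3.01 ms
64 bytes from 10.2.0.4: icmp_seq=63 ttl=63 time=3.84 ms
"""


def received_sequences(text: str) -> list:
    """Extract the icmp_seq numbers of all replies in the ping output."""
    return [int(n) for n in re.findall(r"icmp_seq=(\d+)", text)]


def missing_sequences(seqs: list) -> list:
    """Return the sequence numbers absent between the first and last reply."""
    seen = set(seqs)
    return [s for s in range(min(seqs), max(seqs) + 1) if s not in seen]


def loss_percent(transmitted: int, received: int) -> float:
    """Packet loss as a percentage of transmitted packets."""
    return (transmitted - received) / transmitted * 100


print(missing_sequences(received_sequences(PING_EXCERPT)))  # [58, 59, 60, 61, 62]
print(f"{loss_percent(66, 61):.5f}")                        # 7.57576
```

The five missing sequence numbers 58 to 62 match the difference between the 66 transmitted and 61 received packets reported in the statistics.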

By comparing the last timestamp captured on NVA1 (08:10:03, Example 5-5) with the first timestamp captured on NVA2 (08:10:10, Example 5-6), we can see that the failover time is approximately 7 seconds.

    azureuser@nva1:~$ sudo tcpdump -i eth0 host 10.2.0.4 or 10.1.0.4 -n
    tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
    listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes
    08:09:35.778873 IP 10.1.0.4 > 10.2.0.4: ICMP echo request, id 24, seq 28, length 64
    08:09:35.778928 IP 10.1.0.4 > 10.2.0.4: ICMP echo request, id 24, seq 28, length 64
    08:09:35.779890 IP 10.2.0.4 > 10.1.0.4: ICMP echo reply, id 24, seq 28, length 64
    08:09:35.779900 IP 10.2.0.4 > 10.1.0.4: ICMP echo reply, id 24, seq 28, length 64
    <snipped>
    08:10:02.819831 IP 10.1.0.4 > 10.2.0.4: ICMP echo request, id 24, seq 55, length 64
    08:10:02.819871 IP 10.1.0.4 > 10.2.0.4: ICMP echo request, id 24, seq 55, length 64
    08:10:02.821394 IP 10.2.0.4 > 10.1.0.4: ICMP echo reply, id 24, seq 55, length 64
    08:10:02.821403 IP 10.2.0.4 > 10.1.0.4: ICMP echo reply, id 24, seq 55, length 64
    08:10:03.821441 IP 10.1.0.4 > 10.2.0.4: ICMP echo request, id 24, seq 56, length 64
    08:10:03.821480 IP 10.1.0.4 > 10.2.0.4: ICMP echo request, id 24, seq 56, length 64
    08:10:03.823456 IP 10.2.0.4 > 10.1.0.4: ICMP echo reply, id 24, seq 56, length 64
    08:10:03.823472 IP 10.2.0.4 > 10.1.0.4: ICMP echo reply, id 24, seq 56, length 64
    Connection to 20.240.138.91 closed by remote host.
    Connection to 20.240.138.91 closed.

Example 5-5: Tcpdump from NVA1.

    azureuser@nva2:~$ sudo tcpdump -i eth0 host 10.2.0.4 or 10.1.0.4 -n
    tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
    listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes
    08:10:10.947115 IP 10.1.0.4 > 10.2.0.4: ICMP echo request, id 24, seq 63, length 64
    08:10:10.947179 IP 10.1.0.4 > 10.2.0.4: ICMP echo request, id 24, seq 63, length 64
    08:10:10.948768 IP 10.2.0.4 > 10.1.0.4: ICMP echo reply, id 24, seq 63, length 64

Example 5-6: Tcpdump from NVA2.
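The failover time can be computed directly from the two captures: the last packet seen on NVA1 in Example 5-5 and the first packet seen on NVA2 in Example 5-6. The sketch below assumes that the clocks of both NVAs are synchronized closely enough for the comparison to be meaningful; the function name seconds_between is our own.

```python
from datetime import datetime

TCPDUMP_TIME_FORMAT = "%H:%M:%S.%f"


def seconds_between(earlier: str, later: str) -> float:
    """Difference between two tcpdump timestamps taken on the same day."""
    start = datetime.strptime(earlier, TCPDUMP_TIME_FORMAT)
    end = datetime.strptime(later, TCPDUMP_TIME_FORMAT)
    return (end - start).total_seconds()


last_on_nva1 = "08:10:03.823472"   # last line of Example 5-5
first_on_nva2 = "08:10:10.947115"  # first line of Example 5-6
print(f"{seconds_between(last_on_nva1, first_on_nva2):.3f} s")  # 7.124 s
```

The result of roughly 7.1 seconds is the gap during which neither NVA forwarded the ping traffic.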
