A modern application typically comprises several modules, each assigned specific roles and responsibilities within the system. Application architecture governs the interactions and communications between these modules and users. One prevalent architecture is the three-tier architecture, encompassing the Presentation, Application, and Data tiers. This book explains how you can build a secure and scalable networking environment for your applications running in Microsoft Azure. Besides a basic introduction to Microsoft Azure, the book explains various solutions for virtual machine Internet access, connectivity, security, and scalability.

Each chapter follows the same structure. It begins with an Introduction that describes the solution and presents the topology diagram. You then learn how to deploy the service using the Azure portal. Several chapters also include deployment and verification examples using Azure CLI or Azure PowerShell.

Figure 1 illustrates the various resources and services introduced in the book. While the diagram doesn't explicitly cover Azure networking best practices, it does highlight the relationships between different building blocks. If you're new to Azure networking, the diagram might look complex at first, but by the time you finish the book, it should be clear.

Table of Contents

Chapter 1: Azure Virtual Network Basics 1

Introduction 1

Geography, Region, and Availability Zone 1

Resource Groups and Resources 2

Create Resource Group with Azure Portal 4

Create VNet with Azure Portal 11

Deploy VNet with Azure Resource Manager Templates 18

Pre-Tasks 19

Deployment Template for VNet 21

Deployment Parameters for VNet 26

Deploying Process 29

Summary 36

References 37

Chapter 2: Network Security Groups (NSG) 40

Introduction 40

VM to NSG Association 42

Step-1: Deploy VM vm-Bastion 42

Step-2: SSH connection to VM 56

NSG to Subnet Association 60

Step-1: Create New NSG 61

Step-2: Add an Inbound Security Rule to NSG 62

Step-3: Associate the NSG to Subnet 64

Application Security Group 67

Step-1: Create Application Security Group 67

Step-2: Add a Security Rule into NSG 68

Step-3: Associate VM’s NIC with ASG 69

Step-4: Test Connection 72

Resources View 73

Pricing 77

References 78

Chapter 3: Internet Access with VM-Specific Public IP 80

Introduction 80

Public IP Address for the Internet Access 81

Public IP Allocation Method 82

Stock-Keeping Unit (SKU) 82

Public IP Verification 83

Internet Outbound Traffic Testing 85

Public IP Addresses for Azure Internal Communication 86

References 90

Chapter 4: Virtual Network NAT Service - NAT Gateway 91

Introduction 91

Create Public IP address 92

Create NAT Gateway 95

Basic Settings 96

Outbound IP Address 97

VNet and Subnet Association 98

Deploying 99

Verification 100

Pricing 104

Delete NAT Gateway 105

References 107

Chapter 5: Hybrid Cloud - Site-to-Site VPN 109

Introduction 109

Create GatewaySubnet 110

Create Virtual Network Gateway (VGW) 111

Create Local Gateway (LGW) 119

Create VPN Connection 123

Configure Local Gateway 128

Download Configuration File 128

Configure Local Gateway 133

Verification 134

Data Plane Testing 137

Pricing 138

References 139

Chapter 6: Hybrid Cloud – Site-to-Site VPN with BGP 141

Introduction 141

Enable BGP on VGW 142

Enable BGP on LGW 144

Enable BGP on S2S VPN Connection 146

Configure BGP on LGW 148

Control Plane Verification on VGW 149

Control Plane Verification on LGW 153

References 155

Chapter 7: VNet-to-VNet VPN 157

Introduction 157

VGW Settings 158

Connection Settings 159

Control Plane Verification 163

References 170

Chapter 8: VNet Peering 171

Introduction 171

Deploy VNet Peering 173

Verification 176

VNet Peering 176

Control Plane - BGP 180

The RIB Associated with NIC vm-spoke-2739 182

The RIB Associated with NIC vm-spoke-1214 183

The RIB Associated with NIC vm-front-1415 184

Data Plane Verification 185

Transit VNet – Hub and Spoke Topology 186

Route Propagation 187

Pricing 190

References 191

Chapter 9: Hybrid Cloud - Routing Studies 192

Introduction 192

BGP Operation 194

Routing Process: on-Prem DC Subnet 10.11.11.0/24 196

Routing Process: VNet CIDR 10.0.0.0/16 203

Data Plane Test Between on-prem DC and Azure 206

Azure Internal Data Plane Verification 206

References 207

Chapter 10 - Appendix A: On-Prem DC – BGP Configuration 208

Lgw-dc1 208

Lgw-dc2 209

Leaf-01 209

Chapter 10 - Appendix B: Azure – BGP Configuration 210

Lgw-dc1 JSON View 210

Lgw-dc2 JSON View 210

Vgw-hub1 JSON View 211

Vgw-hub2 JSON View 212

Chapter 10: Virtual WAN Part 1 - S2S VPN and VNet Connections 214

Introduction 214

Create Virtual WAN (vWAN) 216

Create Virtual Hub and S2S VPN GW 219

Verifying S2S VPN Gateway 224

Create VPN Site 227

VPN site to vHub connection 233

Configure the Remote Site Edge Device 239

VNet to vHub connection 245

Control Plane verification 249

VNet Route Table 249

Virtual Hub Route Table 251

VPN Gateway Routing 251

Branch Control Plane 254

Data Plane verification 256

Pricing 257

References 258

Chapter 11 - Appendix A: Swe-Branch Configuration 259

Chapter 11: Virtual WAN Part 2 – VNet Segmentation 261

Introduction 261

Default Route Table 262

Create New Route Table 266

Control Plane Verification 271

Data Plane Testing 276

References 277

Chapter 12: Virtual WAN Part III - Global Transit Network 279

Introduction 279

Create a New vHub: vhub-ger 280

Create a New VPN Site: ger-branch 281

Control Plane verification 283

vHub Effective Routes 284

vNIC Effective Routes 285

Branch Site Routing 287

BGP Multipathing 289

Data Plane verification 290

Intra-Region Branch-to-VNet 290

Inter-Region Branch-to-VNet (Branch-to-Hub-to-Hub-Vnet) 292

Inter-Region Branch-to-Branch (Branch-to-Hub-to-Hub-Branch) 293

Intra-Region VNet-to-VNet 294

Inter-Region VNet-to-VNet (VNet-to-Hub-to-Hub-Vnet) 295

References 296

Chapter 13 - Appendix A: Ger-Branch Configuration 297

Chapter 13: ExpressRoute 301

Introduction 301

Create a New ExpressRoute Circuit 304

ERP Circuit Provision 307

Configure eBGP Peering with MSEE 310

Connect VNet to ExpressRoute Circuit 313

Create GatewaySubnet 313

Configure ExpressRoute Gateway 314

Connect VNet to Circuit 315

Appendix A. CE-Bailey Cfg and Show Commands 316

References 317

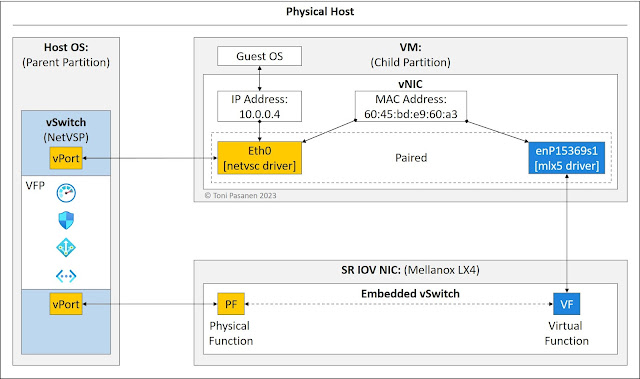

Chapter 14: Azure VM networking – Virtual Filtering Platform 319

Introduction 319

Hyper-V Extensible Virtual Switch 320

Virtual Filtering Platform - VFP 320

Policy Programming to VFP 323

Accelerated Networking 324

Packet Walk 326

Enabling Accelerated Networking 327

Verification 328

References 331

Chapter 15: NVA Part I - NVA Between East-West Subnets 333

Introduction 333

Default Routing in Virtual Network 333

Route Traffic through the Network Virtual Appliance (NVA) 337

Create Route Table with Azure CLI 338

Add Routing Entry to Route Table 339

Create Route Table with Azure Portal 340

Add Routing Entry to Route Table 341

Associate Route Table with Subnet with Azure CLI 342

Associate Route Table with Subnet with Azure Portal 343

Enable IP Forwarding on NVA’s vNICs with Azure CLI 345

Enable IP Forwarding on NVA’s vNICs with Azure Portal 347

Enable IP Forwarding on Linux NVA 348

Data Plane testing 349

Appendix A – Chapter 15 352

Add a new vNIC to Virtual Machine 352

References 353

Chapter 16: NVA Part II - Internet Access with a single NVA 355

Introduction 355

Packet Walk 357

Deployment 359

Linux NVA 359

IP Forwarding on vNIC 360

Data Plane verification 361

References 363

Chapter 17: NVA Redundancy with Public Load Balancer 364

Introduction 364

Load balancer Configuration using Azure Portal 366

Basic Information 367

Frontend IP 369

Backend Pool 371

Inbound Rule 375

NVA Configuration 380

Enable IP Forwarding on NIC 380

Enable IP Forwarding on Linux NVA 381

Configuring destination NAT on Linux 382

Configuring source NAT on Linux 383

Packet Walk 385

References 388

Chapter 18: NVA Redundancy with ILB Spoke-to-Spoke VNet 390

Introduction 390

Internal Load Balancer’s Settings 392

Frontend IP Address 392

Backend Pool 393

Health Probes 393

Inbound Rule 394

VNet Peering 395

User Defined Routing 397

Data Plane Test 401

Verification 402

Failover Test 404

References 406

Chapter 19: NVA Redundancy with ILB, On-prem to Spoke VNet 407

Introduction 407

Packet Walk: SSH Session Initiation – TCP SYN 408

Packet Walk: SSH Session Initiation – TCP SYN-ACK 411

Configuration and Verification 413

Data Plane Testing Using Ping 419

References 421

Chapter 20: Cloud Scale Load Balancing 423

Introduction 423

Management & Control Plane – External Connections 424

Data Plane - External Connections 426

Data Plane and Control Plane for Outbound Traffic 428

Fast Path 430

References 433

Chapter 21: Virtual Network Manager - VNet Peering 434

Introduction 434

Create Virtual Network Manager 435

Create Network Group 439

Create Connectivity Configuration 444

Deploy Connectivity Configuration 450

Add VNets Dynamically to Network Group 456

Verification 460

Delete Policy 462

Pricing 466

References 467

Chapter 22: Network Manager and Security 469

Introduction 469

Create Security Admin Configuration 472

Create SAC Rule Collection 473

Deploy Security Admin Configuration 476

Security Admin Rules Processing 479

Verification 480

References 481

Chapter 23: Azure Firewall 482

Introduction 482

Create Azure Firewall 483

Define Firewall Policy Rule 490

Route Traffic to Firewall 492

Data Plane Testing 495

References 496